Object counting is pivotal for understanding the composition of scenes. Previously, this task was dominated by class-specific methods, which have gradually evolved into more adaptable class-agnostic strategies. However, these strategies have their own limitations, such as the need for manual exemplar input and a separate pass for each category, resulting in significant inefficiencies. This paper introduces a new, more practical approach that counts multiple object categories simultaneously using an open-vocabulary framework. Our solution, OmniCount, uses semantic and geometric insights from pre-trained models to count multiple categories of objects as specified by users, all without additional training. OmniCount generates precise object masks and leverages point prompts via the Segment Anything Model (SAM) for efficient counting. To evaluate OmniCount, we created the OmniCount-191 benchmark, a first-of-its-kind dataset with multi-label object counts, including points, bounding boxes, and VQA annotations. Our comprehensive evaluation on OmniCount-191, alongside other leading benchmarks, demonstrates OmniCount's exceptional performance, significantly outpacing existing solutions.

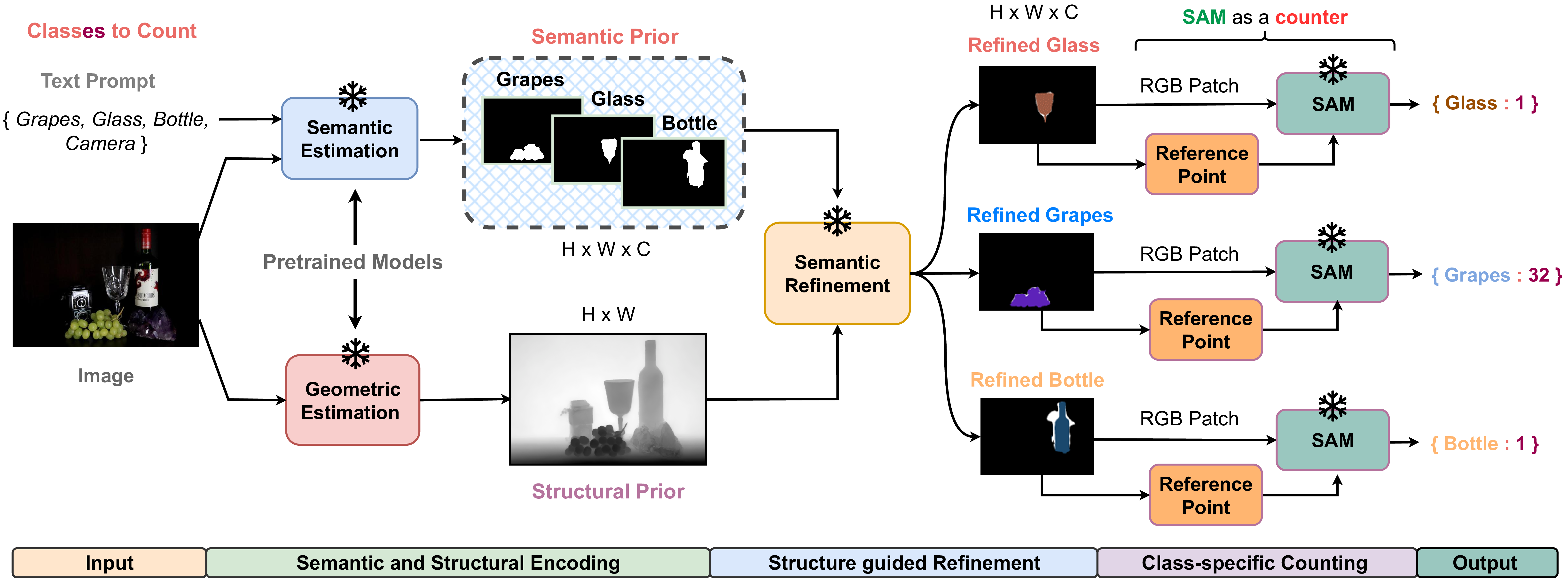

OmniCount Pipeline: Our method starts by processing the input image and its target object classes, using the Semantic Estimation and Geometric Estimation modules to generate class-specific masks and depth maps. These initial priors are refined by a Semantic Refinement module, producing precise binary masks of the target objects. The refined masks are used both to obtain RGB patches for each class and to extract reference points that reduce overcounting. SAM uses these RGB patches and reference points to create instance-level masks, yielding precise object counts. ❄ represents frozen pre-trained models.
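The last two stages of the caption (per-class RGB patches, reference points, and instance-level counting) can be illustrated with a toy sketch. This is not the OmniCount implementation: the refined binary masks are assumed to be given, connected-component labeling stands in for SAM's instance masks, and region centroids stand in for the reference points. The names `connected_components` and `count_classes` are hypothetical.

```python
from collections import deque

import numpy as np


def connected_components(mask):
    """Return one (N, 2) array of (row, col) pixels per 4-connected region."""
    mask = np.asarray(mask, dtype=bool)
    seen = np.zeros_like(mask, dtype=bool)
    height, width = mask.shape
    regions = []
    for r in range(height):
        for c in range(width):
            if not mask[r, c] or seen[r, c]:
                continue
            # Breadth-first flood fill from an unvisited foreground pixel.
            queue = deque([(r, c)])
            seen[r, c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < height and 0 <= nx < width
                            and mask[ny, nx] and not seen[ny, nx]):
                        seen[ny, nx] = True
                        queue.append((ny, nx))
            regions.append(np.array(pixels))
    return regions


def count_classes(image, class_masks):
    """For each class: build the RGB patch, pick reference points, count regions."""
    results = {}
    for name, mask in class_masks.items():
        mask = np.asarray(mask, dtype=bool)
        # RGB patch: keep pixels inside the class mask, zero out the rest.
        patch = image * mask[..., None]
        # Assumption: one reference point per region, taken as its centroid.
        regions = connected_components(mask)
        points = [tuple(int(v) for v in region.mean(axis=0).round())
                  for region in regions]
        results[name] = {"patch": patch, "points": points, "count": len(points)}
    return results


# Toy input: an 8x8 image with two "apple" blobs and one "banana" blob.
image = np.full((8, 8, 3), 200, dtype=np.uint8)
apple = np.zeros((8, 8), dtype=bool)
apple[0:3, 0:3] = True
apple[5:8, 5:8] = True
banana = np.zeros((8, 8), dtype=bool)
banana[4, 0:3] = True

results = count_classes(image, {"apple": apple, "banana": banana})
counts = {name: entry["count"] for name, entry in results.items()}
print(counts)  # {'apple': 2, 'banana': 1}
```

In a real pipeline the centroid points would instead be passed to a promptable segmenter as point prompts. The toy version only shows how one reference point per instance ties the count to distinct regions rather than to raw mask area.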

Example per-image object counts:

Potatoes: 4, Apples: 2, Bananas: 3, Onions: 4

Crow: 9, Pigeons: 10

Jackfruit: 1, Lichi: 12, Dragonfruit: 1, Pears: 27, Coconut: 3, Pineapple: 2

Dog: 1, Cats: 1, Rabbit: 1, Bird: 1, Guineapig: 1, Boar: 1

Cars: 7

Strawberries: 16, Kiwis: 14

Baseball field: 4

Elephant: 1, Buffaloes: 3

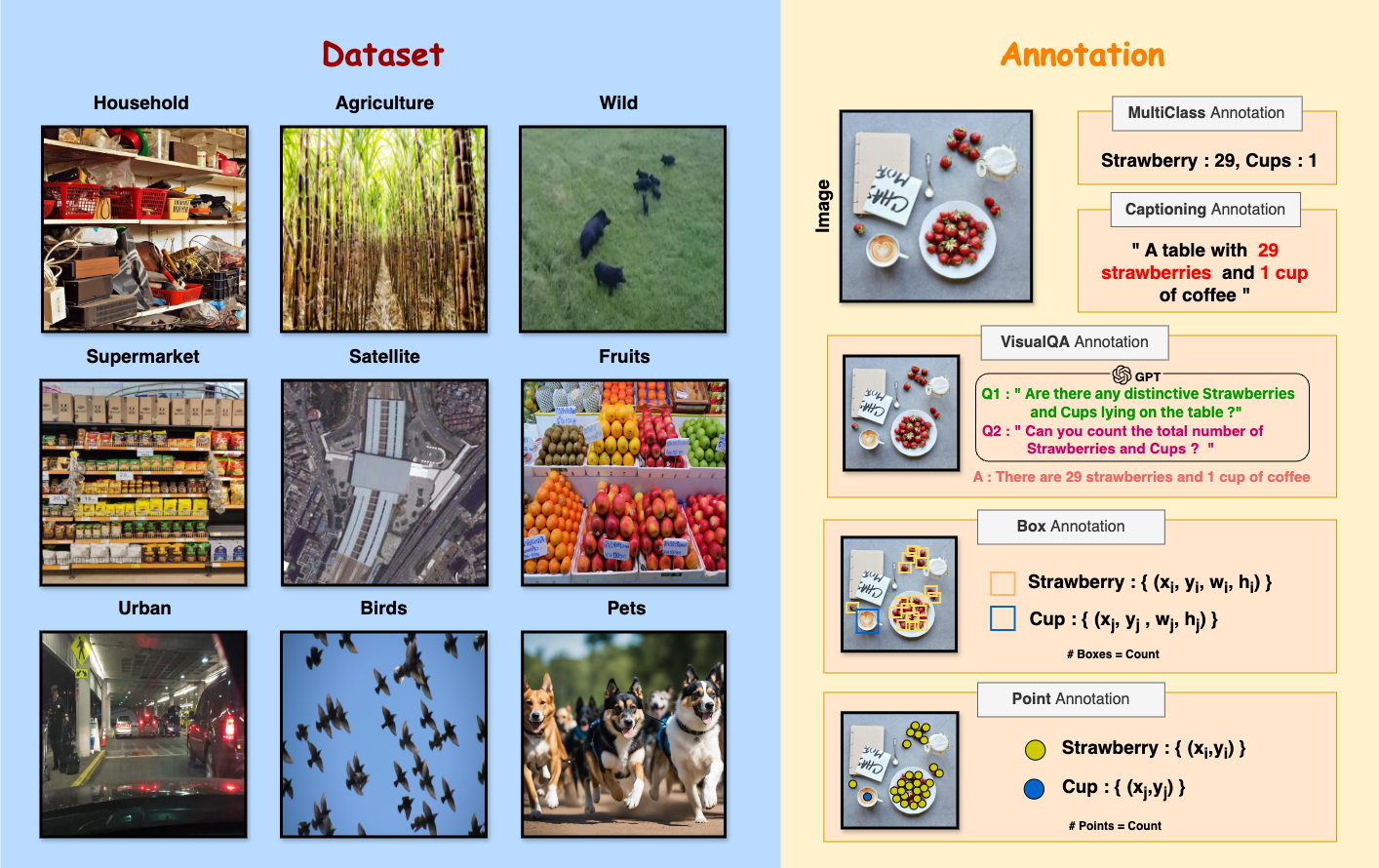

OmniCount-191: A comprehensive benchmark for multi-label object counting. The dataset consists of 30,230 images with multi-label object counts, including points, bounding boxes, and VQA annotations. For more details, please visit our Hugging Face page.

@article{mondal2024omnicount,
  title={OmniCount: Multi-label Object Counting with Semantic-Geometric Priors},
  author={Mondal, Anindya and Nag, Sauradip and Zhu, Xiatian and Dutta, Anjan},
  journal={arXiv preprint arXiv:2403.05435},
  year={2024}
}

Object counting has legitimate commercial applications in urban planning, event logistics, and consumer behavior analysis. However, the same technology also facilitates human surveillance, which unscrupulous actors may misappropriate, intentionally or unintentionally, for nefarious purposes. We must therefore exercise reasoned skepticism towards any downstream deployment of our research that enables the monitoring of individuals without proper legal safeguards and ethical constraints. To mitigate foreseeable misuse and uphold principles of privacy and civil liberties, we will release all of our source code under the Open RAIL-S License, which expressly prohibits exploitative applications through robust contractual obligations and liabilities.